import numpy as np
import matplotlib.pyplot as plt

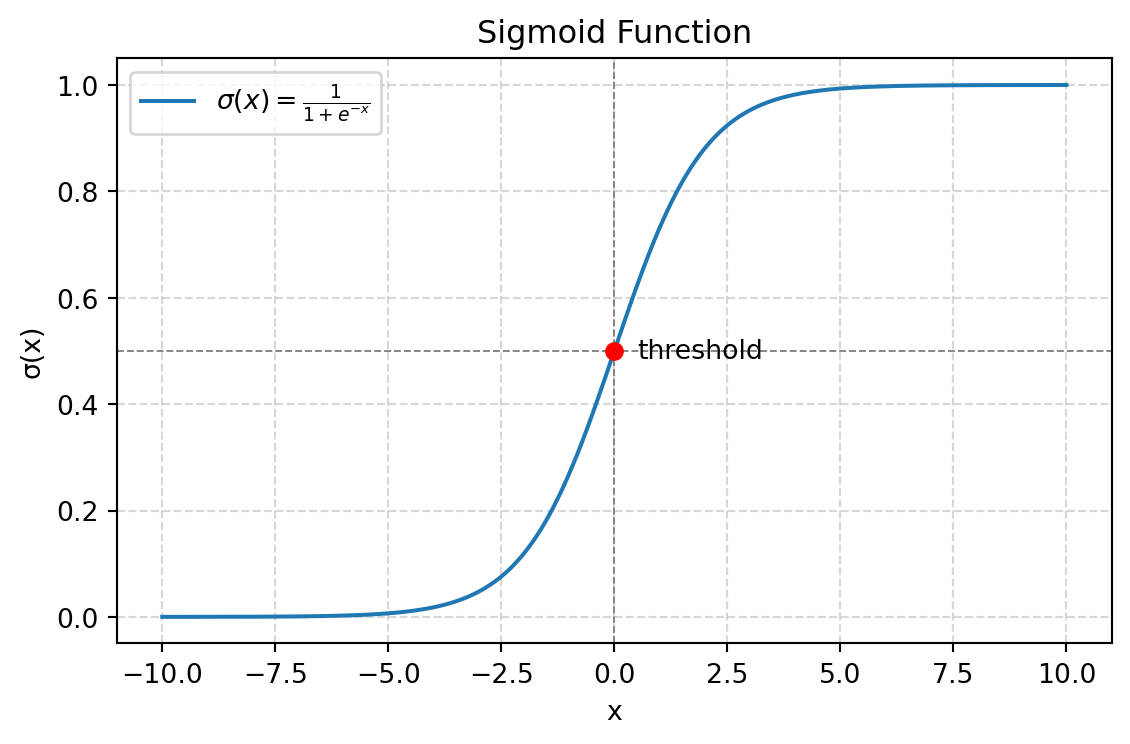

def sigmoid(x: np.ndarray) -> np.ndarray:
    """Return the logistic sigmoid 1 / (1 + exp(-x)), applied elementwise."""
    return 1 / (1 + np.exp(-x))
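
# Optional sketch, not part of the original script: the formula above can
# emit an overflow warning from np.exp when x is a large negative number
# (roughly below -709 for float64). The hypothetical helper below evaluates
# the same function using only exp(-|x|), which cannot overflow. On the
# plotted range [-10, 10] both versions should agree.
import numpy as np  # repeated so this sketch stands alone


def stable_sigmoid(x):
    """Logistic sigmoid computed without overflowing exponentials."""
    x = np.asarray(x, dtype=float)
    z = np.exp(-np.abs(x))  # always in (0, 1]
    # x >= 0: 1 / (1 + e^{-x});  x < 0: e^{x} / (1 + e^{x})
    return np.where(x >= 0, 1 / (1 + z), z / (1 + z))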

# Sample the function on a symmetric interval around zero.
x = np.linspace(-10, 10, 400)
y = sigmoid(x)

plt.figure(figsize=(6, 4))
plt.plot(x, y, label=r'$\sigma(x) = \frac{1}{1 + e^{-x}}$')

# Dashed guides through the midpoint (0, 0.5), where sigma(0) = 0.5.
plt.axvline(0, color='gray', lw=0.7, ls='--')
plt.axhline(0.5, color='gray', lw=0.7, ls='--')

# Mark and label the midpoint.
plt.scatter([0], [0.5], color='red', zorder=5)
plt.text(0.5, 0.5, 'threshold', va='center')

plt.xlabel('x')
plt.ylabel('σ(x)')
plt.title('Sigmoid Function')
plt.legend()
plt.grid(True, ls='--', alpha=0.5)
plt.tight_layout()
plt.show()